OcclusionFusion:

Occlusion-aware Motion Estimation for Real-time Dynamic 3D Reconstruction

Wenbin Lin, Chengwei Zheng, Jun-Hai Yong, Feng Xu

Tsinghua University

CVPR 2022

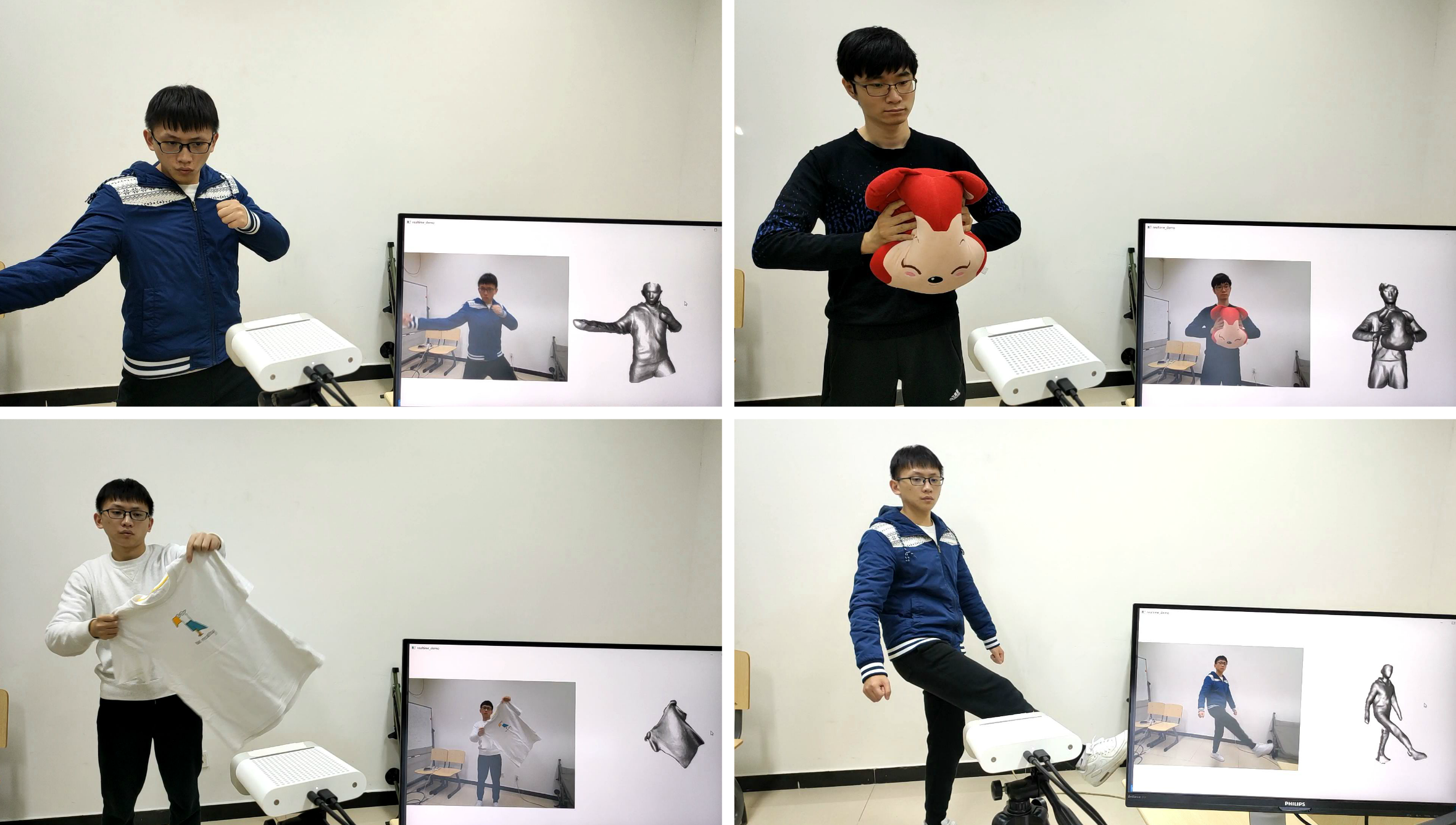

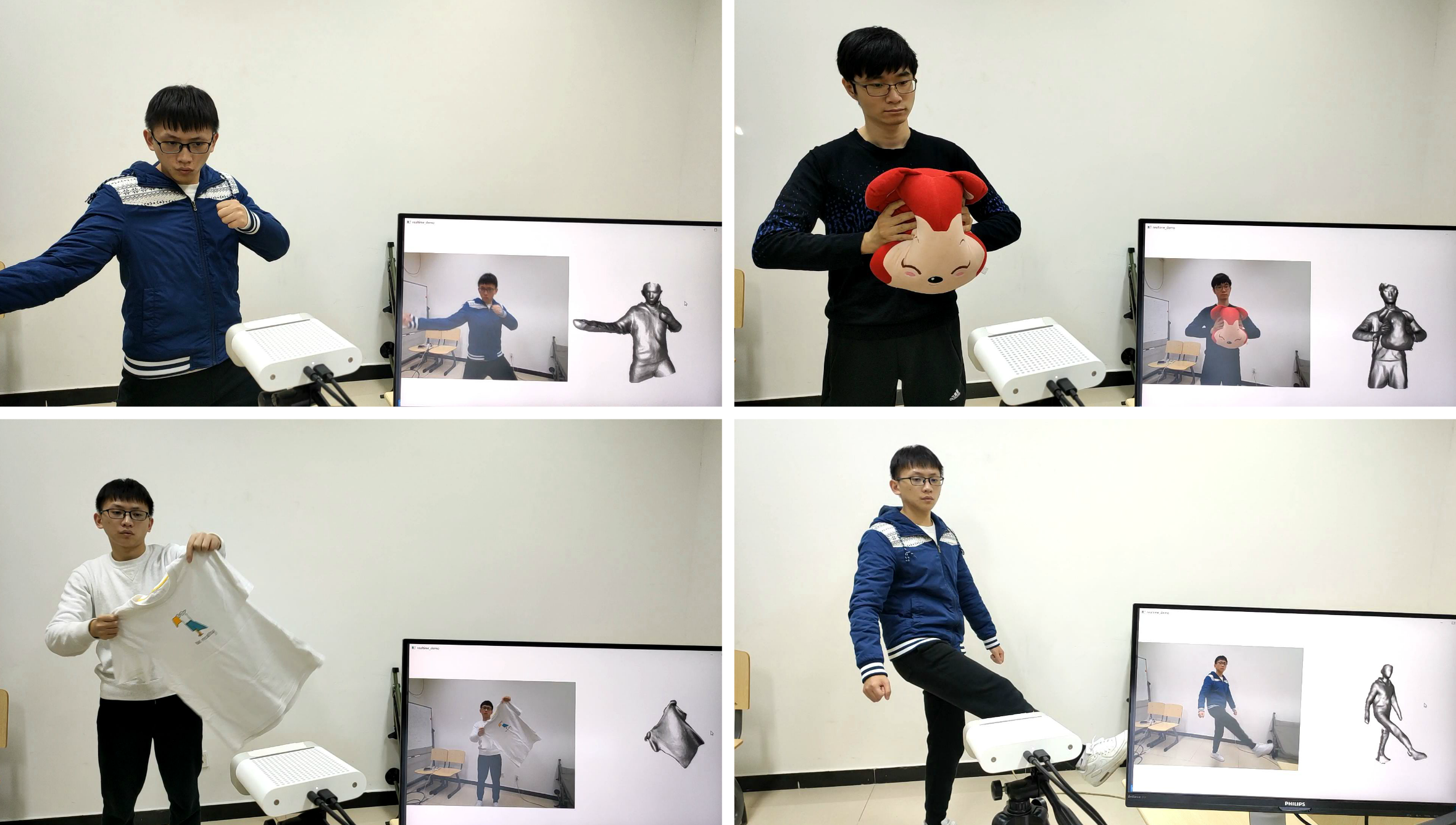

RGBD-based real-time dynamic 3D reconstruction suffers from inaccurate inter-frame motion estimation, because errors can accumulate during online tracking. The problem is even more severe for single-view systems due to strong occlusions. Based on these observations, we propose OcclusionFusion, a novel method that computes occlusion-aware 3D motion to guide the reconstruction. In our technique, the motion of visible regions is first estimated and then combined with temporal information to infer the motion of occluded regions through a graph neural network that incorporates an LSTM. Furthermore, our method computes the confidence of the estimated motion by modeling the network output with a probabilistic model, which reduces the influence of untrustworthy motions and enables robust tracking. Experimental results on public datasets and our own recorded data show that our technique outperforms existing single-view real-time methods by a large margin. With reduced motion errors, the proposed technique can handle long and challenging motion sequences.
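To make the role of motion confidence concrete, here is a minimal toy sketch in Python. It is not the paper's formulation: it assumes, purely for illustration, that each graph node comes with a predicted 3D motion and a scalar standard deviation, and that confidence is a Gaussian-shaped function of that deviation. The names `motion_confidence`, `weighted_mean_motion`, and the `scale` parameter are hypothetical.

```python
import math


def motion_confidence(sigmas, scale=1.0):
    """Map each node's predicted standard deviation to a confidence in [0, 1].

    Assumption for illustration only: confidence = exp(-(sigma / scale)^2).
    """
    return [math.exp(-((s / scale) ** 2)) for s in sigmas]


def weighted_mean_motion(motions, confidences):
    """Confidence-weighted average of per-node 3D motion vectors."""
    total = sum(confidences)
    if total == 0.0:
        raise ValueError("all confidences are zero")
    return tuple(
        sum(c * m[axis] for m, c in zip(motions, confidences)) / total
        for axis in range(3)
    )


# Two nodes with consistent, low-uncertainty motion and one uncertain outlier
# (for example, a node in an occluded region). Values are made up.
motions = [(0.10, 0.00, 0.00), (0.12, 0.00, 0.00), (0.90, 0.50, 0.00)]
sigmas = [0.05, 0.05, 3.0]

conf = motion_confidence(sigmas)
avg = weighted_mean_motion(motions, conf)
print(conf)
print(avg)
```

In this toy setup the uncertain third node receives a confidence close to zero, so the weighted average stays close to the motion of the two reliable nodes. A real system would use such weights inside its tracking optimization rather than in a plain average.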

@inproceedings{lin2022occlusionfusion,

title={OcclusionFusion: Occlusion-aware Motion Estimation for Real-time Dynamic 3D Reconstruction},

author={Lin, Wenbin and Zheng, Chengwei and Yong, Jun-Hai and Xu, Feng},

booktitle={Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2022}

}

This work was supported by the Beijing Natural Science Foundation (JQ19015), the NSFC (No. 61727808, 62021002), the National Key R&D Program of China (2018YFA0704000, 2019YFB1405703), and TC190A4DA/3. It was also supported by THUIBCS, Tsinghua University, and BLBCI, Beijing Municipal Education Commission. Jun-Hai Yong and Feng Xu are the corresponding authors.